Stay on top of what’s happening in AI marketing news with our monthly recap.

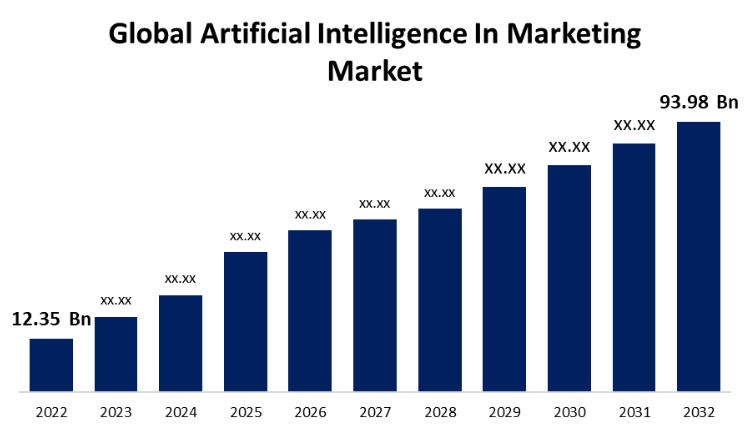

Just in case you were worried about things fizzling out anytime soon, Spherical Insights forecasts that the global AI marketing market will reach $93.98 billion by 2032. From a 2022 valuation of $12.35 billion, that growth implies a compound annual growth rate (CAGR) of 22.5% over the ten-year period.
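For readers who want to check the math, here is a minimal Python sketch of the standard CAGR formula applied to the two figures above. The function name `cagr` and the variable names are illustrative, not taken from the Spherical Insights report, and the ten-year span assumes the forecast runs from 2022 to 2032.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate as a fraction (0.10 means 10%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    # CAGR = (end / start) ** (1 / years) - 1
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the recap, in billions of US dollars.
market_2022 = 12.35
market_2032 = 93.98
forecast_years = 2032 - 2022  # assumed ten-year span

market_cagr = cagr(market_2022, market_2032, forecast_years)
print(f"Implied CAGR: {market_cagr:.1%}")
```

Run as written, the result rounds to the 22.5% figure cited in the forecast.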

During the same period, the natural language processing (NLP) segment of AI is anticipated to grow at a similar pace, with a CAGR of around 22.3%.

The NLP segment accounts for AI systems that understand and interpret human language, allowing customers to interact with chatbots and voice assistants, and allowing companies to conduct sentiment analysis via the unstructured data of social media posts, customer reviews, and emails.

AI Marketing News

Reflecting that growth…

Digiday reports that Publicis Groupe, the global advertising and PR company, has unveiled its new AI platform, CoreAI, as part of a €100 million AI investment for 2024. CoreAI, to be showcased at VivaTech, is focused on media planning and creative production. Following the announcement, Publicis’s stock price surged to a record high.

New AI Marketing Tool to Cut Production?

Ad Age reports on a new AI service from Tool, designed to cut production time and costs in advertising. “Toolkit” bases its images on a dataset representative of a brand’s products and “visual DNA.” The generated images reduce the need for physical photoshoots and require minimal finishing touches.

Responding to the ethical concerns of using AI-generated models, particularly for high-profile advertising campaigns, Tool’s President asserted that “If we’re going to use AI-generated models, the people who those are based on will be paid.” But he also admitted that the details of those payments remain unclear.

In a much more egregious example of problems surrounding generative AI…

Fast Company reported on the explicit AI-created images of Taylor Swift that recently flooded the internet. The piece called for preventative action from tech companies and regulators to protect women and marginalized communities from the harms of deepfakes, emphasizing the need for systemic change in addressing such digital sexual abuse.

And speaking of AI regulation…

After landmark AI regulations in the EU late last year, and developing regulations and priorities in China, the US approach to AI policy is emerging as industry-friendly, emphasizing best practices and sector-specific regulation while relying on AI companies’ cooperation. With that approach currently resting on President Biden’s executive order and on government agencies outlining best practices for AI, Brookings is calling for congressional action.

With more calls to action sure to come, MIT looks forward with predictions of what the next 12 months of AI regulation will look like. Key issues include addressing AI’s harms and risks, like bias and inaccuracy, and managing the debates over high-risk AI systems. AI is also becoming a focal point in techno-nationalism, particularly in the US-China technological rivalry. Additionally, the rapid deployment of generative AI in 2023 is likely to significantly impact the 2024 elections, with concerns over political disinformation and the need for stronger safeguards and policies. All in all, the future of AI regulation is sure to be challenging and dynamic.

And for the broader creator economy…

OpenAI has launched its GPT Store, which functions like an app marketplace. Wired outlines how ChatGPT Plus subscribers can create and publish custom chatbots.

Users can develop their own ‘GPTs’ (generative pretrained transformers) without advanced coding skills. The platform, emerging amid OpenAI’s legal and regulatory challenges, requires adherence to content guidelines, prohibiting bots for romantic purposes, impersonation, law enforcement automation, political campaigning, and academic cheating.

While creating a GPT is accessible, the potential for earning through the store remains uncertain due to unclear payment plans and OpenAI’s ongoing legal and regulatory issues, including what’s been called the “existential threat” lawsuit brought by the New York Times.

And lastly, TikTok is testing the waters of generative AI music with “AI Song.” The Verge reiterates that the name is temporary and that the melodies come from a pre-saved catalog. So far, the tool is producing mixed results. Many of the lyrics are nonsensical, which is to be expected:

@jonahcruzmanzano How to create a song using AI on TikTok. 🎵 Explore the world of AI music on TikTok! Join me in creating catchy Pop, EDM, and HipHop songs using simple prompts and words. 🤖✨ Let's make music together! 🚀🎶 What is AI Song? AI Song is an experimental feature available on TikTok, utilizing artificial intelligence (AI) to generate songs based on prompts you input. How does AI Song work? The lyric generation in AI Song is driven by Bloom, a robust language model employing machine learning to produce text. It's important to note that lyrics generated by this model might include errors, and the same lyrics may be generated for multiple users utilizing the feature. For your security, refrain from sharing personal or confidential information while using AI Song #tiktok #tiktoknewfeature #tiktokai #tiktoknews #tiktokupdate ♬ Vibes - ZHRMusic

But it also appears that the AI has trouble staying in tune:

@kristileilani What is TikTok AI Song? The new experimental feature, powered by the Bloom LLM, lets creators generate unique songs and lyrics for videos and photos. 🎧 #tiktoknews #newfeature #generativeai #aimusic #aimusicvideo #tiktokai #tiktokaisong #bloom #llm #macinelearning #whatisit #testing ♬ I'm an a i - Kristi Leilani

And no, unfortunately, we do not yet have the next “Heart on My Sleeve.” TikTok has also updated its policies for better transparency around AI-created content.

But AI Song is just getting started, and its progress reflects the exciting new possibilities the year holds for multimodal AI.